Our tech future is not inevitable. It’s shaped by human agency.

Read our Year in Review, our method for tackling thorny tech & society issues, and more!

👋We hope you are having a wonderful holiday season! In today’s newsletter, you’ll see our Year in Review, our method for tackling thorny tech & society issues, two upcoming gatherings happening in NYC (we’re setting up others in London, SF, and additional locations), and videos from two recently held livestreams.

We also published a write-up about our child safety and Safety by Design activities, released a facilitator guide for course one of our series of five Responsible AI courses, and are continuing to gather feedback on our newly released Responsible AI Impact Report.

All Tech Is Human is busy lining up our 2026 activities; as always, reach out with your ideas. Now, onto the newsletter! 👇

Read about our 2025 accomplishments and where we are headed!

Thorny tech & society issues are systemic problems.

That’s why All Tech Is Human has always taken a whole-of-ecosystem approach. Our wide range of field-building activities provides the crucial infrastructure that supports the Responsible Tech movement.

In our Year in Review, you’ll read about all of the ways that All Tech Is Human has uniquely served as a catalyst, convener, and connector. The Year in Review also outlines how All Tech Is Human, noted for being the organization that has woven together the modern Responsible Tech movement, can continue playing a leadership role in 2026 and beyond. This involves a paradigm shift in how we adjust to a future that is perpetually uncertain.

🤔Do you know of others who should be connected with All Tech Is Human? Here is our LinkedIn post about the Year in Review, which you can share or tag others in.

We released a mini-guide to our method for tackling thorny tech & society issues

In 2026, we will be creating a repeatable blueprint for tackling complex tech & society issues that can be utilized by companies, governments, and organizations. In our newly released mini-guide, we describe what we mean by a “thorny issue,” our HUMAN ways of approaching problems, and the general process for developing solutions that address complexity.

💡Our HUMAN way of thinking:

Holistic: observe and analyze the problem in the context of its occurrence

United: bring together the ideas, people, and organizations affected by the problems

Multiplied: ensure that the approach is multistakeholder and multidisciplinary

Adaptable: create flexible solutions that are adaptable and inclusive of the audience

Nuanced: maintain a never-done frame of mind

Suggested approach:

Build a multistakeholder team

Identify relevant frameworks

Diagnose the issue

Ideate solutions

Test & repeat

Establish governance

Responsible AI Impact Report

In our inaugural Responsible AI Impact Report, we discuss the most urgent risks, emerging safeguards, and public-interest solutions, and provide a roadmap for shaping how AI impacts society in the year ahead. We examine the state of Responsible AI (RAI) throughout 2025 and highlight what we consider to be some of the most impactful contributions made by civil society organizations this year to enrich this broad and dynamic field.

Our central area of focus: who determines the purpose of AI and the kinds of lives it will shape?

“An AI-enabled future grounded in human dignity depends on institutions that can govern powerful technologies with a commitment to the public good. When societies build this kind of civic architecture, people gain the ability to direct technological development rather than be shaped by it. This report highlights how that architecture is emerging through civil society’s work: accountable standards, rigorous evaluations, provenance systems that reinforce information integrity, and safeguards that respond to prominent harms. Together, these efforts outline a path toward a digital world that strengthens democratic agency and reflects the values we choose to uphold.” -Vilas Dhar, President of The Patrick J. McGovern Foundation

Related Resource: Vilas Dhar, quoted above, recently appeared on our livestream.

Our recent livestream (above) highlighted how Responsible AI has rapidly shifted into mainstream public concern, driven by technological advances, growing societal impacts, and a patchwork of evolving governance efforts.

Panelists from Data & Society (Janet Haven), Partnership on AI (Stephanie Bell), and Mozilla Foundation (Zeina Abi Assy) emphasized that while federal protections in the U.S. have weakened, state-level action, global regulatory momentum, and heightened civic engagement are creating new pathways for accountability. This conversation was moderated by ATIH’s Rebekah Tweed, lead author for the Responsible AI Impact Report discussed throughout.

Our recent livestream (above) explored a troubling paradox: while most Americans believe that climate change is real and human-caused, online misinformation continues to erode public trust in climate science. Why doesn’t climate misinformation trigger platform safety systems? How can we change that?

The panelists were Alice Goguen Hunsberger (Head of Trust & Safety, Musubi), Theodora Skeadas (Head of Red Teaming, Humane Intelligence + ATIH advisor), Pinal Shah (Senior Advisor, CAS Strategies), Rod Abhari (Research Fellow, Center of Media Psychology and Social Influence, Northwestern University), and Melissa Aronczyk (Professor of Media Studies in the School of Communication and Information, Rutgers University). ATIH’s Sandra Khalil moderated.

This challenge is compounded by:

political polarization

restructuring within Trust and Safety teams

difficulty of moderating nuanced or indirect climate claims

All Tech Is Human creates a “big tent” that naturally weaves together all stakeholders

Responsible Tech Mixer + Live Podcast Taping

Join All Tech Is Human for the first Responsible Tech Mixer of 2026! We’re pleased to partner with our friends at P&T Knitwear for a fireside chat (and live podcast taping) and networking session on January 12th in NYC, as part of All Tech Is Human’s Responsible Tech Author Series.

The fireside chat features Hilke Schellmann (Associate Professor of Journalism at New York University) in conversation with Jennifer Strong (audio journalist and creator and host of the SHIFT podcast) about Hilke’s book The Algorithm and more.

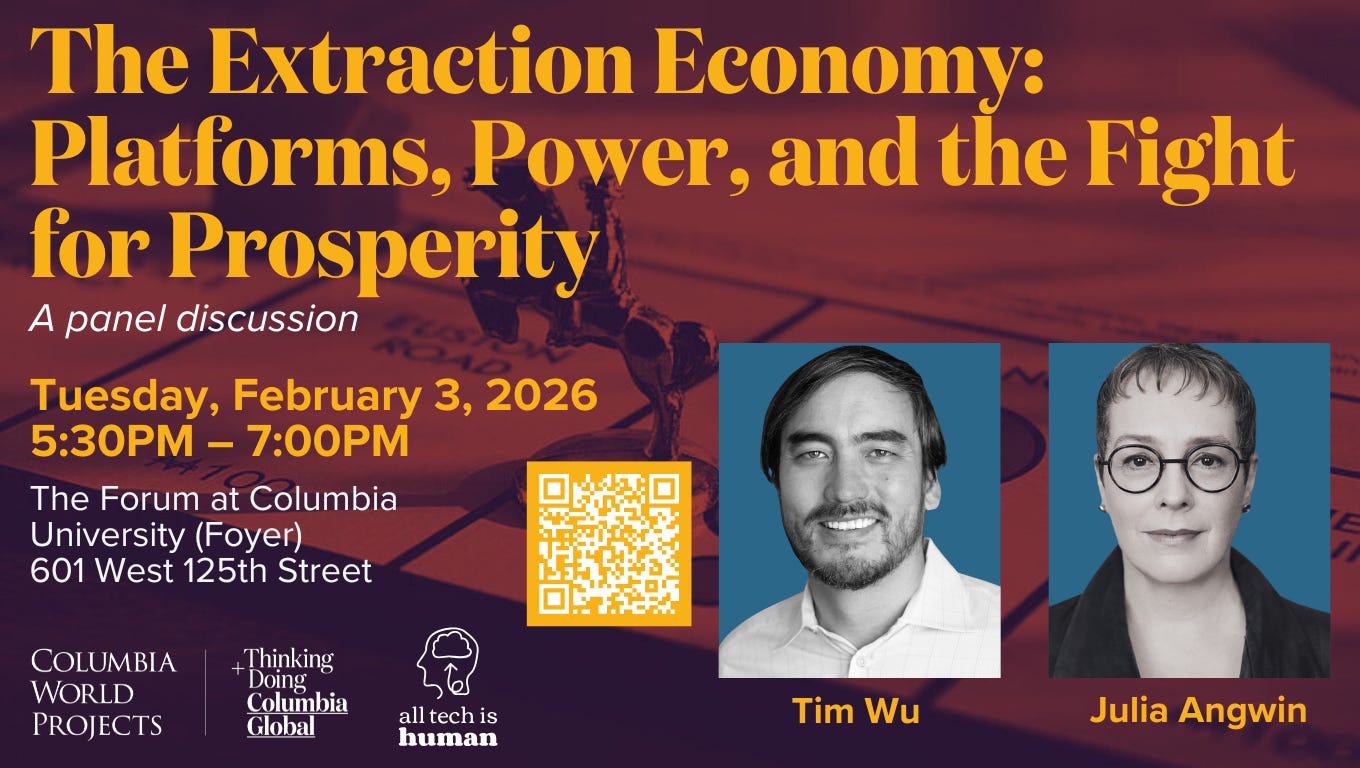

Hosted by Columbia World Projects in collaboration with All Tech Is Human, the discussion will center on questions such as:

How did a small set of technology platforms rise to command our attention, our data, and our economy?

What has this concentration of power cost us in terms of innovation, prosperity, and democratic possibility?

This dynamic in-person conversation between Tim Wu (author of The Age of Extraction, Columbia Law School professor and former White House official) and Julia Angwin (award-winning investigative journalist and founder of Proof News) will dig into how platform power has transformed whole sectors of the economy, how emerging AI systems may accelerate inequality, and what bold legal, institutional, and civic interventions are needed to build a more democratic digital future.

Be on the lookout for our rapid response report on AI companions arriving in early 2026.

✍️We are continuing to collect survey responses to assess public perceptions, hopes, fears, risks, expectations, and experiences with AI Companions.

🗣️On December 15th, our recent Princeton University Research Fellow Rose Guingrich, who helped create our AI Companions resources this year and moderated a related livestream, will be addressing members of the US Congress.

Red carpet interviews by AI for All Tomorrows—recorded live at the Responsible Tech Summit

💙 We wish you a happy holiday season and a joyful New Year! Thank you for your participation, which has powered All Tech Is Human.

📖 Time on your hands over the holidays? Pick up a book from our curated Responsible Tech Reading List.

⭐ Our projects & links | Our network | Email us | Donate to ATIH | Our mission

🦜 Looking to chat with others in Responsible Tech after reading our newsletter? Join the conversations happening on our Slack (sign in | apply).

💪 All Tech Is Human is a nonprofit organization dedicated to building a more responsible, inclusive, and ethical tech future by uniting a broad range of people and ideas across civil society, government, industry, and academia. Our whole-of-ecosystem approach brings together technologists, academics, civil society leaders, and everyday individuals to collaboratively address complex challenges around AI governance, digital rights, platform accountability, and the broader social impacts of technology.